Simultaneous localization and mapping (SLAM) robotics techniques: a possible application in surgery

Introduction

Simultaneous localization and mapping (SLAM) is the process by which a mobile robot constructs a map of an unknown environment while simultaneously computing its own location within that map (1). SLAM has been formulated and solved as a theoretical problem in many different forms. It has been implemented in several domains, from indoor to outdoor environments, and the possibility of applying robotic techniques to surgical problems has captured the attention of the medical community. The common point is that the accuracy of navigation affects the success and the outcome of a task, regardless of the application field. Since its beginning, the SLAM problem has been developed and optimized in different ways. There are three main paradigms: Kalman filters (KF), particle filters and graph-based SLAM. The first two are also referred to as filtering techniques, in which the position and map estimates are augmented and refined by incorporating new measurements as they become available. Because of their incremental nature, these approaches are generally known as on-line SLAM techniques. Conversely, graph-based SLAM estimates the entire trajectory and the map from the full set of measurements, and therefore addresses the so-called full SLAM problem.

The choice of the type of algorithm to use depends on the peculiarities of the application and on many factors, such as the desired map resolution, the update time, the nature of the environment, the type of sensor the robot is equipped with, and so on.

This article introduces the state of the art of SLAM techniques in Section II and focuses on SLAM applications in the medical field in Section III. Section IV draws conclusions on the benefits of employing these techniques in surgery.

State of the art SLAM techniques

KF techniques

Smith et al. (2) were the first to propose representing the structure of the navigation area in a discrete-time state-space framework, introducing the concept of the stochastic map. Because the original KF algorithm relies on a linearity assumption that is rarely fulfilled, two variants have mainly been employed since then: the extended KF (EKF) and the information filter (IF). The EKF overcomes the linearity assumption by describing the state transition probability and the measurement probabilities with nonlinear functions, which are linearized around the current estimate (3). The literature contains several examples of the use of the EKF algorithm (4-10), and it has been the basis of many recent developments in the field (11,12).
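
A minimal sketch of the EKF cycle follows, assuming a hypothetical one-dimensional robot that moves along a line with a linear motion model and measures its range to a beacon mounted at height `h` above that line, so that the measurement function is nonlinear. The Jacobian of the measurement function takes the place of the linear observation matrix of the plain KF. All numbers are illustrative, and noise-free measurements are used so that the behaviour is easy to follow.

```python
import math

def ekf_predict(x, P, u, Q):
    """Prediction with a linear motion model x_k = x_{k-1} + u and process noise Q."""
    return x + u, P + Q

def ekf_update(x, P, z, R, h=2.0):
    """Update with a nonlinear range measurement z = sqrt(x^2 + h^2) + noise.

    h is the height of a hypothetical beacon above the robot's line of travel.
    """
    z_pred = math.sqrt(x * x + h * h)   # nonlinear measurement function
    H = x / z_pred                       # Jacobian dz/dx evaluated at the current estimate
    S = H * P * H + R                    # innovation variance
    K = P * H / S                        # Kalman gain
    return x + K * (z - z_pred), (1.0 - K * H) * P

x, P = 0.5, 1.0       # deliberately wrong initial estimate and its variance
true_x = 1.0
for _ in range(5):
    true_x += 1.0
    x, P = ekf_predict(x, P, u=1.0, Q=0.01)
    z = math.sqrt(true_x ** 2 + 2.0 ** 2)   # noise-free range for illustration
    x, P = ekf_update(x, P, z, R=0.04)
print(x, true_x, P)
```

Starting from a wrong initial estimate, the first update already pulls the estimate close to the true position and the variance `P` shrinks; with real, noisy data the noise terms `Q` and `R` would also have to be tuned.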

The unscented KF (UKF) was developed more recently to overcome some of the main problems of the EKF (13). Like the EKF, it approximates the state distribution with a Gaussian random variable, but here the distribution is represented by a minimal set of carefully chosen sample points, called σ-points. When propagated through the nonlinear system, these points capture the posterior mean and covariance accurately to the third order of the Taylor series expansion for any nonlinearity (14). Examples of the use of the UKF for navigation and localization can be found in (15-17).
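
The unscented transform at the heart of the UKF can be illustrated for a scalar Gaussian passed through f(x) = x². This sketch assumes the basic σ-point weighting with n = 1 and κ = 2, one common choice for Gaussian priors; the scaled variants with additional tuning parameters used in many UKF implementations are not shown. For this particular function the transform reproduces the exact mean and variance, whereas a first-order (EKF-style) linearization misses the P term in the mean.

```python
import math

def unscented_transform_1d(mu, P, f, kappa=2.0):
    """Propagate N(mu, P) through f with 2n + 1 = 3 sigma points (n = 1)."""
    n = 1
    spread = math.sqrt((n + kappa) * P)
    sigma_points = [mu, mu + spread, mu - spread]
    weights = [kappa / (n + kappa), 1.0 / (2 * (n + kappa)), 1.0 / (2 * (n + kappa))]
    ys = [f(s) for s in sigma_points]
    mean = sum(w * y for w, y in zip(weights, ys))
    var = sum(w * (y - mean) ** 2 for w, y in zip(weights, ys))
    return mean, var

mu, P = 1.0, 0.5
ut_mean, ut_var = unscented_transform_1d(mu, P, lambda x: x * x)

# Exact moments of x^2 for x ~ N(mu, P)
exact_mean, exact_var = mu ** 2 + P, 4 * mu ** 2 * P + 2 * P ** 2
# First-order linearization (EKF-style): mean = f(mu), var = f'(mu)^2 * P
lin_mean, lin_var = mu ** 2, (2 * mu) ** 2 * P
print(ut_mean, exact_mean, lin_mean)
```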

The dual of the KF is the information filter, which relies on the same assumptions; the key difference lies in the way the Gaussian belief is represented. The estimated covariance and estimated state are replaced by the information matrix (the inverse of the covariance) and the information vector, respectively. This brings several advantages over the KF: measurements are integrated by simply summing information matrices and vectors, which can provide more accurate estimates (18), and the information filter tends to be numerically more stable in many applications (3). The KF is more advantageous in the prediction step, which is additive in covariance form, whereas the IF prediction involves the inversion of two matrices, which increases computational complexity in a high-dimensional state space. These roles are reversed in the measurement update step, illustrating the dual character of Kalman and information filters.
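
In the scalar case the duality is easy to see. The sketch below, with illustrative values, assumes direct measurements z = x + v with noise variance R. It fuses the same measurements once in covariance (Kalman) form and once in information form, where each update is just an addition, and then converts back to check that both forms give the same posterior.

```python
def kf_update(x, P, z, R):
    """Covariance-form (Kalman) update for a direct measurement z = x + v, v ~ N(0, R)."""
    K = P / (P + R)
    return x + K * (z - x), (1.0 - K) * P

def if_update(xi, omega, z, R):
    """Information-form update for the same model: information is simply added."""
    return xi + z / R, omega + 1.0 / R

x0, P0 = 0.0, 4.0
measurements = [(1.2, 1.0), (0.8, 0.5), (1.1, 2.0)]   # illustrative (z, R) pairs

# Covariance form: one gain computation per measurement
x_kf, P_kf = x0, P0
for z, R in measurements:
    x_kf, P_kf = kf_update(x_kf, P_kf, z, R)

# Information form: omega = 1/P (information "matrix"), xi = x/P (information vector)
xi, omega = x0 / P0, 1.0 / P0
for z, R in measurements:
    xi, omega = if_update(xi, omega, z, R)
x_if, P_if = xi / omega, 1.0 / omega   # converting back to moments requires an inversion
print(x_kf, x_if, P_kf, P_if)
```

Because the information-form updates are sums, the order in which the measurements are fused does not matter, which is one reason this representation is attractive for multi-sensor fusion.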

Thrun et al. (18), observing that the normalized information matrix is nearly sparse, developed the sparse extended information filter (SEIF), a variant of the extended information filter (EIF) that maintains a sparse approximation of the dependencies between environment features in order to achieve constant-time updates. They were inspired by other SLAM filters that represent relative distances (19-22), none of which achieves constant-time updating.

To overcome the difficulties of both the EKF and the IF, and to reduce computational complexity, a combined Kalman-information filter SLAM algorithm (CF-SLAM) was adopted in (23). By combining the EKF and the EIF, it enables highly efficient SLAM in large environments.

Particle filter techniques

Particle filters (13,24,25) comprise a large family of sequential Monte Carlo algorithms (26,27), in which the posterior is represented by a set of random state samples, called particles. Almost any probabilistic robot model that admits a Markov chain formulation is suitable for their application. Their accuracy increases with the available computational resources, so they do not require a fixed computation time. They are also relatively easy to implement: they do not need to linearize nonlinear models and, unlike the KF, do not require closed-form solutions of the conditional probability. Their main limitation is poor performance in high-dimensional spaces. Rao-Blackwellized particle filters (24,28-30) lead to more efficient solutions, including for the data association problem, but these algorithms are susceptible to considerable estimation inconsistencies because they generally underestimate their own error (31). The need to increase estimation consistency, together with the problem of the heterogeneity of the trajectory samples, led to the adoption of different sampling strategies (32-34).
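
A minimal sampling-importance-resampling (SIR) particle filter is sketched below for a hypothetical robot moving along a line with a sensor that measures its position directly with Gaussian noise. Each step samples from the motion model, weights the particles by the measurement likelihood and resamples; no linearization or closed-form posterior is needed. The noise levels, particle count and sensor model are assumptions chosen for illustration.

```python
import math
import random

def likelihood(z, x, std):
    """Gaussian measurement likelihood p(z | x), up to a constant factor."""
    return math.exp(-0.5 * ((z - x) / std) ** 2)

def particle_filter_step(particles, u, z, motion_std, meas_std, rng):
    # 1. Prediction: sample each particle from the motion model p(x_k | x_{k-1}, u_k)
    moved = [p + u + rng.gauss(0.0, motion_std) for p in particles]
    # 2. Correction: importance weights from the measurement likelihood p(z_k | x_k)
    weights = [likelihood(z, p, meas_std) for p in moved]
    if sum(weights) == 0.0:
        weights = [1.0] * len(moved)   # every particle implausible: fall back to uniform
    # 3. Resampling: draw a new set with probability proportional to the weights
    return rng.choices(moved, weights=weights, k=len(moved))

rng = random.Random(0)
true_x = 0.0
particles = [rng.uniform(-5.0, 5.0) for _ in range(1000)]   # no prior knowledge of the pose
for _ in range(15):
    true_x += 1.0 + rng.gauss(0.0, 0.1)   # true motion for a commanded step u = 1.0
    z = true_x + rng.gauss(0.0, 0.5)      # hypothetical noisy position sensor
    particles = particle_filter_step(particles, 1.0, z, 0.1, 0.5, rng)
estimate = sum(particles) / len(particles)
print(estimate, true_x)
```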

FastSLAM (24,35,36) denotes a family of algorithms that integrate particle filters and the EKF. It exploits the fact that the feature estimates are conditionally independent given the observations, the controls and the robot path. As a consequence, the mapping problem can be split into separate, independent estimation problems, one for each feature in the map. FastSLAM uses a particle filter to estimate the robot path and, for each particle, an EKF for each feature location; this offers computational advantages over plain EKF implementations and copes well with nonlinear robot motion models. However, the particle approximation does not converge uniformly in time because the map, which is a static parameter, is part of the state space (37).
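
The FastSLAM factorization can be sketched in one dimension: each particle carries one robot path hypothesis and an independent small filter (here a scalar mean and variance) for every landmark. The sketch assumes known data association, a hypothetical measurement z = m - x + noise and a known initial pose; because this measurement is linear in the landmark position, each per-landmark EKF reduces to a scalar Kalman update. It is a simplified illustration in the spirit of FastSLAM 1.0, not the implementation of (24,35,36).

```python
import math
import random

class Particle:
    """One FastSLAM particle: a pose hypothesis plus one scalar filter per landmark."""
    def __init__(self, x, landmarks=None):
        self.x = x                          # robot pose hypothesis (1D)
        self.landmarks = landmarks or {}    # landmark id -> [mean, variance]
        self.weight = 1.0

def fastslam_step(particles, u, observations, motion_std, meas_std, rng):
    R = meas_std ** 2
    for p in particles:
        p.x += u + rng.gauss(0.0, motion_std)      # sample the path from the motion model
        p.weight = 1.0
        for lm_id, z in observations:              # hypothetical model: z = m - x + noise
            if lm_id not in p.landmarks:
                p.landmarks[lm_id] = [p.x + z, R]  # initialise from the first sighting
                continue
            mu, sigma = p.landmarks[lm_id]
            z_hat = mu - p.x                       # predicted measurement
            S = sigma + R                          # innovation variance (dz/dm = 1)
            K = sigma / S                          # gain of this landmark's own filter
            p.landmarks[lm_id] = [mu + K * (z - z_hat), (1.0 - K) * sigma]
            # importance weight: likelihood of the observation under this particle
            p.weight *= math.exp(-0.5 * (z - z_hat) ** 2 / S) / math.sqrt(2 * math.pi * S)
    weights = [p.weight for p in particles]
    if sum(weights) == 0.0:
        weights = [1.0] * len(particles)
    chosen = rng.choices(particles, weights=weights, k=len(particles))
    # copy the maps so that duplicated particles evolve independently afterwards
    return [Particle(p.x, {k: list(v) for k, v in p.landmarks.items()}) for p in chosen]

rng = random.Random(1)
true_landmarks = {0: 3.0, 1: 7.0, 2: 12.0}
true_x = 0.0
particles = [Particle(0.0) for _ in range(200)]   # known initial pose
for _ in range(12):
    true_x += 1.0 + rng.gauss(0.0, 0.05)
    obs = [(i, m - true_x + rng.gauss(0.0, 0.1))
           for i, m in true_landmarks.items() if abs(m - true_x) < 5.0]
    particles = fastslam_step(particles, 1.0, obs, 0.05, 0.1, rng)
estimates = {i: sum(p.landmarks[i][0] for p in particles) / len(particles)
             for i in true_landmarks}
print(estimates)
```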

Expectation maximization (EM) technique and improvement with missing or hidden data

The EM algorithm (38) is an efficient iterative procedure for computing parameter estimates in probabilistic models with missing or hidden data. Each iteration consists of two steps: the expectation step (E-step), which estimates the missing data given the current model and the observed data, and the maximization step (M-step), which computes the parameters that maximize the expected log-likelihood found in the E-step. The estimates of the missing data from the E-step are used in place of the actual missing data. Each iteration is guaranteed not to decrease the likelihood, and the algorithm converges to a local maximum of the objective function. A real-time implementation of this algorithm is described in (39).
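
As a concrete instance of the E- and M-steps, the sketch below fits a one-dimensional mixture of two Gaussians, where the hidden data is the component that generated each sample. The data set and initial guesses are synthetic and illustrative. The recorded log-likelihood should never decrease from one iteration to the next, which reflects the convergence property described above.

```python
import math
import random

def normal_pdf(y, mean, var):
    return math.exp(-0.5 * (y - mean) ** 2 / var) / math.sqrt(2 * math.pi * var)

def em_gmm_1d(data, means, variances, weights, iterations=50):
    """EM for a 1D Gaussian mixture; the hidden data are the component labels."""
    K = len(means)
    log_likelihoods = []
    for _ in range(iterations):
        # E-step: responsibilities r[k] = P(label = k | y, current parameters)
        resp = []
        ll = 0.0
        for y in data:
            joint = [weights[k] * normal_pdf(y, means[k], variances[k]) for k in range(K)]
            total = sum(joint)
            ll += math.log(total)   # log-likelihood of the current parameters
            resp.append([j / total for j in joint])
        log_likelihoods.append(ll)
        # M-step: closed-form maximisers of the expected complete-data log-likelihood
        for k in range(K):
            nk = sum(r[k] for r in resp)
            means[k] = sum(r[k] * y for r, y in zip(resp, data)) / nk
            variances[k] = sum(r[k] * (y - means[k]) ** 2 for r, y in zip(resp, data)) / nk
            weights[k] = nk / len(data)
    return means, variances, weights, log_likelihoods

rng = random.Random(0)
data = [rng.gauss(0.0, 1.0) for _ in range(200)] + [rng.gauss(5.0, 1.0) for _ in range(200)]
means, variances, weights, ll = em_gmm_1d(data, [-1.0, 6.0], [1.0, 1.0], [0.5, 0.5])
print(means, weights)
```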

Because standard EM requires all the data to be available at each iteration, an online version has been implemented (40), in which the data do not need to be stored because they are used sequentially. EM has also been used to relax the assumption, common to many SLAM formulations, that the environment is static. Most existing methods are robust for mapping environments that are static, structured and limited in size, whereas mapping unstructured, dynamic or large-scale environments remains an open research problem. The literature follows mainly two directions: partitioning the model into two maps, one holding only the static landmarks and the other the dynamic landmarks (41,42), or tracking moving objects while mapping the static landmarks (43,44).
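
The idea of an online EM can be sketched with a stochastic-approximation update: instead of storing the data, running averages of the sufficient statistics are updated with a decreasing step size after each new sample, and the M-step is recomputed from them. The sketch assumes a 1D Gaussian mixture with known, shared variance, a step size gamma_n = (n + 1)^(-0.6) and a synthetic data stream; it illustrates the general principle and is not the SLAM-specific algorithm of (40).

```python
import math
import random

def online_em_gmm_1d(stream, means, weights, var=1.0, decay=0.6):
    """Online EM for a 1D Gaussian mixture with known, shared variance `var`.

    Running averages of the sufficient statistics replace the stored data set;
    the step size gamma_n = (n + 1) ** -decay decreases with the sample index n.
    """
    K = len(means)
    s0 = list(weights)                             # running mean of responsibilities
    s1 = [w * m for w, m in zip(weights, means)]   # running mean of responsibility * y
    for n, y in enumerate(stream, start=1):
        # E-step on the new sample only (the shared Gaussian normaliser cancels out)
        joint = [weights[k] * math.exp(-0.5 * (y - means[k]) ** 2 / var) for k in range(K)]
        total = sum(joint)
        resp = [j / total for j in joint]
        # Stochastic-approximation update of the sufficient statistics
        gamma = (n + 1) ** (-decay)
        for k in range(K):
            s0[k] = (1.0 - gamma) * s0[k] + gamma * resp[k]
            s1[k] = (1.0 - gamma) * s1[k] + gamma * resp[k] * y
        # M-step: closed-form parameters from the running statistics
        total_s0 = sum(s0)
        weights = [s / total_s0 for s in s0]
        means = [s1[k] / s0[k] for k in range(K)]
    return means, weights

rng = random.Random(3)

def sample():
    # hypothetical data source: 40 % of samples from N(0, 1), 60 % from N(5, 1)
    return rng.gauss(0.0, 1.0) if rng.random() < 0.4 else rng.gauss(5.0, 1.0)

stream = (sample() for _ in range(5000))   # each sample is used once and never stored
means, weights = online_em_gmm_1d(stream, means=[-1.0, 6.0], weights=[0.5, 0.5])
print(means, weights)
```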

Graph-based SLAM techniques

Graph-based SLAM addresses the SLAM problem with a graphical formulation: it builds a graph whose nodes represent robot poses or landmarks and whose edges are soft constraints established by sensor measurements (45). This graph-construction phase is called the front-end. The back-end corrects the robot poses to obtain a map of the environment that is consistent with the constraints. The critical point concerns the configuration of the nodes: to make it maximally consistent with the measurements, a large error minimization problem must be solved.
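
A toy back-end makes the front-end/back-end split concrete. In this sketch the front-end is assumed to have produced a hypothetical one-dimensional pose graph: three odometry edges of length 1.0 and a loop-closure edge stating that pose 3 lies 2.7 from pose 0. The back-end fixes pose 0 at the origin and solves the resulting linear least-squares problem through its normal equations; in real 2D or 3D SLAM the constraints are nonlinear, so this solve would be repeated inside an iterative scheme such as Gauss-Newton.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting (small dense systems)."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

def optimize_pose_graph_1d(num_poses, edges):
    """Edges (i, j, z, w) encode the constraint x_j - x_i = z with weight w.

    Pose 0 is fixed at 0 to remove the gauge freedom; the other poses solve H x = b.
    """
    n = num_poses - 1
    H = [[0.0] * n for _ in range(n)]
    b = [0.0] * n
    for i, j, z, w in edges:
        # Jacobian of the residual e = x_j - x_i - z: +1 for x_j, -1 for x_i
        terms = [(j, 1.0), (i, -1.0)]
        for a, ja in terms:
            if a == 0:
                continue   # pose 0 is fixed, not an unknown
            b[a - 1] += w * ja * z
            for c, jc in terms:
                if c != 0:
                    H[a - 1][c - 1] += w * ja * jc
    return [0.0] + solve_linear(H, b)

edges = [(0, 1, 1.0, 1.0), (1, 2, 1.0, 1.0), (2, 3, 1.0, 1.0),   # odometry
         (0, 3, 2.7, 1.0)]                                        # loop closure
poses = optimize_pose_graph_1d(4, edges)
print(poses)
```

The 0.3 discrepancy between odometry (3.0) and the loop closure (2.7) is spread evenly over the four equally weighted edges, giving poses 0, 0.925, 1.85 and 2.775.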

This technique was first introduced by Lu and Milios (22). Bosse et al. (46) developed the ATLAS framework, which integrates global and local mapping using multiple connected local maps, confining the error representation to local areas and adopting topological methods to build a global map that manages the local submaps. Similarly, Estrada et al. proposed Hierarchical SLAM (47), a technique based on independent local maps, while the work of Nüchter et al. (48) aims at building an integrated SLAM system for 3D mapping.

Gutmann and Konolige (49) proposed an approach that combines network construction and loop-closure detection while running an incremental estimation algorithm. Some authors (50-52) applied gradient descent to optimize the SLAM problem. Konolige (53) and Montemerlo and Thrun (54) introduced conjugate gradient, which is more efficient than gradient descent, into the field of SLAM. GraphSLAM (55) reduces the dimensionality of the optimization problem through a variable elimination technique; the nonlinear constraints are linearized and the resulting least squares problem is solved using standard optimization techniques.
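
To illustrate why conjugate gradient is preferred to plain gradient descent, the sketch below solves the same small linear system H x = b with both methods. H and b are assumed to be the normal equations of a hypothetical 1D pose graph with three free poses, three unit odometry edges and a loop closure of length 2.7. Steepest descent uses an exact line search; conjugate gradient, in exact arithmetic, reaches the solution in at most as many iterations as there are unknowns.

```python
def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def steepest_descent(A, b, tol=1e-10, max_iter=10000):
    """Gradient descent with exact line search on f(x) = 0.5 x^T A x - b^T x."""
    x = [0.0] * len(b)
    for k in range(max_iter):
        r = [bi - ai for bi, ai in zip(b, matvec(A, x))]   # negative gradient
        if dot(r, r) ** 0.5 < tol:
            return x, k
        alpha = dot(r, r) / dot(r, matvec(A, r))
        x = [xi + alpha * ri for xi, ri in zip(x, r)]
    return x, max_iter

def conjugate_gradient(A, b, tol=1e-10, max_iter=1000):
    """Conjugate gradient for a symmetric positive-definite A."""
    x = [0.0] * len(b)
    r = b[:]            # residual b - A x for the starting point x = 0
    p = r[:]
    rs = dot(r, r)
    for k in range(max_iter):
        if rs ** 0.5 < tol:
            return x, k
        Ap = matvec(A, p)
        alpha = rs / dot(p, Ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        rs_new = dot(r, r)
        p = [ri + (rs_new / rs) * pi for ri, pi in zip(r, p)]   # next A-conjugate direction
        rs = rs_new
    return x, max_iter

H = [[2.0, -1.0, 0.0], [-1.0, 2.0, -1.0], [0.0, -1.0, 2.0]]   # illustrative values
b = [0.0, 0.0, 3.7]
x_sd, iters_sd = steepest_descent(H, b)
x_cg, iters_cg = conjugate_gradient(H, b)
print(iters_sd, iters_cg, x_cg)
```

On this 3 x 3 system conjugate gradient stops after three iterations, while steepest descent needs many more to reach the same tolerance; the gap typically grows with the size and conditioning of the problem.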

Visual SLAM

A separate section is dedicated to visual SLAM, since optical sensors are increasingly employed in robotics applications, and specifically in surgery. Most vision-based SLAM systems are either monocular or stereo, although trinocular configurations also exist (56). Monocular cameras are widely used (57-64), but the types of camera vary. Large-scale direct monocular SLAM (65) uses only RGB images from a monocular camera as information about the environment and sequentially builds a topological map. Omnidirectional cameras are gaining popularity: they have a 360° view of the environment and, because features stay longer in the field of view, it is easier to find and track them (66,67). To improve the accuracy of the features, some works rely on a multi-sensor system. The system of Castellanos et al. (68), for instance, consists of a 2D laser scanner and a camera and implements an EKF-SLAM algorithm. Other examples of the use of the EKF in visual SLAM can be found in (69,70).

However, a monocular system has some weaknesses in certain situations: it requires extra computation to estimate depth, it suffers from scale propagation problems because absolute scale cannot be recovered from a single camera, and it can fail because of non-observability.
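
The scale problem can be shown numerically: if every scene point and every camera position is multiplied by the same unknown factor s, a pinhole camera records exactly the same pixel coordinates, so the images alone cannot fix absolute scale. The sketch assumes identity camera rotations, a focal length of 500 pixels and arbitrary illustrative coordinates.

```python
def project(point, cam_center, f=500.0):
    """Pinhole projection (pixels) for a camera with identity rotation at cam_center."""
    X, Y, Z = (p - c for p, c in zip(point, cam_center))
    return f * X / Z, f * Y / Z

points = [(0.5, -0.2, 4.0), (-1.0, 0.3, 6.0), (0.2, 0.8, 5.0)]
cameras = [(0.0, 0.0, 0.0), (0.3, 0.0, 0.1)]   # two hypothetical camera positions

s = 2.5                                        # any positive scale factor
scaled_points = [tuple(s * v for v in p) for p in points]
scaled_cameras = [tuple(s * v for v in c) for c in cameras]

original = [project(p, c) for c in cameras for p in points]
rescaled = [project(p, c) for c in scaled_cameras for p in scaled_points]
# Both reconstructions explain the images equally well: the scale s is unobservable.
print(original[0], rescaled[0])
```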

Stereo systems are widely adopted for both landmark detection and motion estimation (71-73), in indoor (74-79) and outdoor environments (80-82). The adoption of particle filter algorithms with stereo vision systems has been analysed in several works (83-86), but it is not the only technique exploited. Schleicher et al. (87), for example, applied a top-down Bayesian method to the images coming from a wide-angle stereo camera to identify and localize natural landmarks. Lemaire et al. (88) discuss two vision-based SLAM strategies that use 3D points as landmarks: one relies on stereovision, where the landmark positions are fully observed from a single location; the other on a bearing-only approach applied to monocular sequences. There have also been many successful approaches to the visual SLAM problem that use RGB-D sensors to exploit the 3D point clouds they provide (89,90).
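
For a rectified stereo pair, depth follows directly from the disparity d between the left and right image columns of the same point: Z = f B / d, with the focal length f in pixels and the baseline B. The sketch below assumes such a rectified pair and a hypothetical calibration (f = 700 px, B = 0.12 m, principal point (320, 240)).

```python
def stereo_triangulate(u_left, v, u_right, f, baseline, cx, cy):
    """Rectified stereo: depth Z = f * B / d, with disparity d = u_left - u_right (pixels).

    Returns the 3D point in the left-camera frame, in the units of `baseline`.
    """
    d = u_left - u_right
    if d <= 0:
        raise ValueError("disparity must be positive for a point in front of both cameras")
    Z = f * baseline / d
    X = (u_left - cx) * Z / f
    Y = (v - cy) * Z / f
    return X, Y, Z

X, Y, Z = stereo_triangulate(u_left=362.0, v=254.0, u_right=320.0,
                             f=700.0, baseline=0.12, cx=320.0, cy=240.0)
print(X, Y, Z)
```

Since Z = f B / d, a fixed disparity error produces a depth error that grows roughly with Z squared, which is why stereo depth is most reliable at short range.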

Most visual SLAM systems make use of algorithms from computer vision, in particular Structure from Motion (SfM). Nowadays, thanks to high-performance computers, techniques such as bundle adjustment (BA) (91) are attracting great interest in the robotics community, since their sparse representations can improve performance over the EKF. The first real-time application of BA is attributed to Mouragnon et al. (92), with their work on visual odometry, followed by the parallel tracking and mapping (PTAM) system of Klein and Murray (93). In ORB-SLAM (94), thanks to the covisibility graph, tracking and mapping operate in a local covisible area, independently of the global map size.
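
Bundle adjustment minimizes the total reprojection error over camera poses and 3D points. To keep the example short, the sketch below fixes the cameras (identity rotations, known hypothetical positions, normalized focal length) and refines a single 3D point with Gauss-Newton and a finite-difference Jacobian. This structure-only case shows the reprojection residual and the normal-equation update that full BA applies jointly, usually with sparse solvers, to all poses and points.

```python
def project(point, cam):
    """Normalised pinhole projection (focal length 1, identity rotation, centre `cam`)."""
    X, Y, Z = (p - c for p, c in zip(point, cam))
    return X / Z, Y / Z

def residuals(point, cameras, observations):
    """Stacked reprojection errors (predicted minus observed) over all cameras."""
    r = []
    for cam, (u_obs, v_obs) in zip(cameras, observations):
        u, v = project(point, cam)
        r += [u - u_obs, v - v_obs]
    return r

def det3(M):
    return (M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1])
            - M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0])
            + M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]))

def solve3(A, b):
    """Solve a 3x3 linear system with Cramer's rule."""
    D = det3(A)
    return [det3([[b[r] if c == i else A[r][c] for c in range(3)] for r in range(3)]) / D
            for i in range(3)]

def refine_point(point, cameras, observations, iterations=10, eps=1e-7):
    """Gauss-Newton on the sum of squared reprojection errors of one 3D point."""
    p = list(point)
    for _ in range(iterations):
        r = residuals(p, cameras, observations)
        # forward-difference Jacobian stored column by column: J[k][i] = dr_i / dp_k
        J = []
        for k in range(3):
            q = list(p)
            q[k] += eps
            J.append([(a - b) / eps for a, b in zip(residuals(q, cameras, observations), r)])
        JtJ = [[sum(x * y for x, y in zip(J[a], J[c])) for c in range(3)] for a in range(3)]
        Jtr = [sum(x * y for x, y in zip(J[a], r)) for a in range(3)]
        delta = solve3(JtJ, [-g for g in Jtr])   # normal equations: (J^T J) delta = -J^T r
        p = [pi + di for pi, di in zip(p, delta)]
    return p

cameras = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (0.0, 0.5, 0.0)]   # hypothetical, known
true_point = (0.2, -0.1, 3.0)
observations = [project(true_point, c) for c in cameras]        # noise-free for clarity
refined = refine_point((0.4, 0.1, 2.5), cameras, observations)
print(refined)
```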

Surgical SLAM

This section focuses on SLAM applications in surgery. Medical areas such as assistance, rehabilitation and surgery can benefit from several devices and algorithms typical of robotics: special robot manipulators for surgery, control algorithms for tele-operation and cognitive algorithms for decision learning are just a few examples (95).

Medical surgical systems provide innovative products that have had a profound effect on the performance and welfare of health care professionals. Robotic technologies have been developed to assist surgeons, to allow optimal and accurate results, and to remove the need for the surgeon to be in the same location as the patient. They are employed in different types of surgery, from cardiac and open-heart surgery to prostate surgery, hysterectomies, joint replacements and kidney surgery.

The da Vinci surgical platform (Intuitive Surgical Inc., Sunnyvale, CA, USA) is a well-known system for minimally invasive surgery that has evolved since its first release. It consists of three main components: the surgeon console; the patient-side cart with four robotic arms, which the surgeon manipulates from the console; and a high-definition 3D vision system. The console is the main controller of the system, through which the surgeon manages the surgical instruments mounted on three of the arms of the robotic cart. Different instruments can be attached according to the planned procedure. The fourth robotic arm is dedicated to camera control. The surgeon views a 3D video image of the procedure while the robotic arms reproduce the movements of the surgeon's hands. Another example is the Sensei X (Hansen Medical Inc., Mountain View, CA, USA), a medical robot designed to perform complex cardiac arrhythmia procedures using a flexible catheter with greater stability and control (96-98). A further commercial robotic tool for surgical applications is Navio PFS™ (Blue Belt Technologies Inc., Pittsburgh, PA, USA). This handheld device comprises a planning and navigation platform and supports precise bone preparation and dynamic soft tissue balancing.

Many other systems exist, such as the DLR MIRO and the MiroSurge project, and a large part of these studies has been conducted in the cardiac field. NeuroMate and NeuroArm, in contrast, are intended for neurosurgery applications, as is the system introduced by Peters et al. (99). All these systems provide improved diagnostic abilities and less invasive yet more precise procedures. Furthermore, robots can reduce surgeons' strain and fatigue during operations lasting several hours.

Image-guided surgery systems are the most widely adopted, since they allow the surgeon to observe an operation from different viewpoints and thus to make the best decision on how to proceed. Because they always include a tracking device integrated with a surgical tool, the surgeon knows the position of the robot relative to targets in the patient's body and can decide where to guide it. One of the best-known image-guidance systems is EnSite NavX (St Jude Medical, St Paul, MN, USA), used for cardiac mapping and ablation. It reconstructs the electrical activity and the cardiac cavities in three dimensions, within which the operator visualizes the ablation catheter in real time without exposing the patient to harmful radiation (100). Carto 3 (Biosense Webster, Diamond Bar, CA, USA) is one of the most sophisticated systems for cardiac electro-anatomical mapping and can guide the treatment of numerous arrhythmias. It localizes the ablation catheter precisely by means of three ultra-low-intensity electromagnetic fields. The anatomical structure of the heart is reconstructed through the contact of the catheter with the endocardial surface, and an electrical signal is recorded for each point in the map (101,102). It has been shown clinically that these image-guidance and electro-anatomical mapping systems can reduce a doctor's reliance on fluoroscopy and the associated radiation exposure (103).

Two other examples of image-guidance systems that use an electromagnetic (EM) tracker registered with preoperative images are presented in (104) and (105). Zhong et al. (106) described an automatic registration method based on the Iterative Closest Point (ICP) algorithm to align EM tracker measurements with preoperative images, but it takes a long time (on the order of 40 minutes) to complete (107). In (108), the authors proposed a software package for creating custom image-guidance solutions.
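
Point-to-point ICP alternates two steps: match each measured point to its nearest model point, then compute the rigid transform that best aligns the matched pairs in the least-squares sense. The 2D sketch below assumes a small, hypothetical L-shaped model point set, measurements generated by a known rotation and translation, and brute-force nearest-neighbour search. A clinical registration such as the one in (106) would work in 3D and would typically also need outlier handling and a reasonable initial alignment.

```python
import math

def best_rigid_transform_2d(src, dst):
    """Closed-form least-squares rotation and translation mapping src[i] onto dst[i]."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    num = den = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - sx, py - sy, qx - dx, qy - dy   # centre both point sets
        num += px * qy - py * qx
        den += px * qx + py * qy
    theta = math.atan2(num, den)
    c, s = math.cos(theta), math.sin(theta)
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)

def transform(theta, tx, ty, pts):
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in pts]

def icp_2d(measured, model, iterations=20):
    """Alternate nearest-neighbour matching and closed-form alignment."""
    theta, tx, ty = 0.0, 0.0, 0.0
    for _ in range(iterations):
        moved = transform(theta, tx, ty, measured)
        matches = [min(model, key=lambda m: (m[0] - p[0]) ** 2 + (m[1] - p[1]) ** 2)
                   for p in moved]
        # re-estimate the full transform from the original measurements to their matches
        theta, tx, ty = best_rigid_transform_2d(measured, matches)
    return theta, tx, ty

model = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0), (0.0, 1.0), (0.0, 2.0)]
true_theta, true_tx, true_ty = 0.08, 0.1, 0.05
# Simulated tracker measurements: model points seen through the inverse transform
measured = transform(-true_theta, 0.0, 0.0, [(x - true_tx, y - true_ty) for x, y in model])
theta, tx, ty = icp_2d(measured, model)
print(theta, tx, ty)
```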

Filtering for surgical applications

Filtering techniques appear in various surgical works. In (109), the pose of a stereoscope is estimated jointly with the 3D positions of features detected in the images by means of an EKF. Grasa et al. (110) also implemented an EKF estimator for a monocular SLAM approach on real sequences of endoscope images. Similarly, in (111) a CCD camera mounted on a fiberscope was used to reconstruct its own motion and the 3D scene in which the surgery takes place. The EKF is not the only technique: some authors implemented an unscented particle filter to localize an intracardiac echo ultrasound catheter using the measurements coming from the instrument itself (112). The algorithm does not need a prior estimate of the registration: during the update step, it compares 21 live ultrasound images with the expected image for each particle in the filter. The authors demonstrated that the algorithm converges in about 30 seconds.

SLAM clinical benefits

Significant tissue deformation prevents precise registration and fusion of pre- and intra-operative data in minimally invasive surgery, most notably in cardiac, gastrointestinal and abdominal procedures. When tissue motion is manageable, image-guided surgery has been shown to be effective and to offer many advantages. Vision-based techniques such as SfM and visual SLAM are now spreading considerably because they can recover both 3D structure and laparoscope motion. They have been exploited in many anatomical settings, such as the abdomen (109,113), colon (114), bladder (115) and sinus (116), but they require the assumption of a static structure. This is a recurrent hypothesis in research in this area, yet most surgical procedures cannot satisfy it (117). SfM has been formulated for non-rigid environments, but its requirement for offline batch processing makes real-time use difficult. In (118), for instance, the estimated cardiac surface is considered static at a selected point, while in (119) it is computed by tracking regions of interest on the organ. The laparoscopic camera is assumed to be fixed, which is not realistic for in vivo applications.

SLAM can considerably enhance the performance of image-guided surgery by enabling accurate navigation through continuous awareness of the robot's location relative to its surroundings, and it can provide a reliable and appropriate model of the operation. By recursively adjusting the registration parameters, the surface deformation and the robot configuration at each time step, the probabilistic filtering approach obtains the most likely solution. Moreover, surgical systems need to combine data from various tracking sensors, images, and pre-operative and live information. SLAM can contribute considerably to this aspect because it originated as a sensor-fusion approach in which information coming from different sources is collected and fused.

Another improvement that SLAM can bring to image-guided systems is the annotation of surface models with motion data: SLAM can estimate the periodic motion of nearby surfaces, and adding this information to image-guidance models, through a more informative graphical interface, allows surgeons to better plan paths towards anatomical targets. It is also possible to compute the uncertainty of the displayed model, giving the doctor feedback that helps determine which aspects of the visualization can be trusted to guide the robot precisely. When inaccuracies such as registration errors place the estimated robot position in a pose that is infeasible given the preoperative models, a constrained update step can be computed to move the estimate into a feasible region, producing a more accurate and reliable representation of the operation.
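
One common way to implement such a constrained step is estimate projection: if the filter estimate violates a linear constraint, it is moved to the closest feasible point in the metric defined by the inverse covariance. The sketch assumes a hypothetical 2D tool-tip estimate and a single half-plane constraint a^T x <= b standing in for an anatomical boundary; a real system would derive the feasible region from the preoperative models and might use a different formulation.

```python
def project_onto_halfplane(x, P, a, b):
    """Estimate projection for a linear inequality constraint a^T x <= b.

    A violating estimate is moved, in the metric of P^-1, onto the constraint boundary:
    x_c = x - P a (a^T x - b) / (a^T P a). Feasible estimates are returned unchanged.
    """
    ax = sum(ai * xi for ai, xi in zip(a, x))
    if ax <= b:
        return list(x)
    Pa = [sum(P[i][j] * a[j] for j in range(len(a))) for i in range(len(a))]
    aPa = sum(ai * pai for ai, pai in zip(a, Pa))
    step = (ax - b) / aPa
    return [xi - step * pai for xi, pai in zip(x, Pa)]

# Hypothetical example: the tool tip must satisfy x + 0.5 y <= 1 (e.g. stay behind a wall)
P = [[0.04, 0.01], [0.01, 0.09]]   # estimate covariance (illustrative)
x_est = [1.2, 0.4]                 # infeasible estimate produced by the filter
x_con = project_onto_halfplane(x_est, P, a=[1.0, 0.5], b=1.0)
print(x_con)
```

Weighting the correction by P moves the estimate mostly along the directions in which it is least certain, rather than straight towards the boundary.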

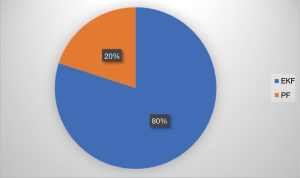

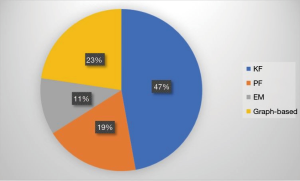

Analysing the literature considered in this paper, the most employed technique in SLAM robotics applications, regardless of the specific field of application, is the KF (Figure 1). In particular, the EKF accounts for the largest share, possibly because it provides good-quality estimates at relatively low complexity. This is also reflected in surgical robotics applications, as illustrated in Figure 2, where almost all the works exploit vision sensors.

Conclusions

Emerging minimally invasive technologies have been embraced by many surgical disciplines over the past few years, which has also brought significant advances in SLAM research in the medical field. In this work, we first reviewed the main techniques adopted and implemented to solve the SLAM problem in any kind of environment. A separate section was dedicated to visual SLAM, since optical sensors are increasingly employed in robotics applications, and specifically in surgery. Several advanced surgical robotic systems, which can translate a surgeon's movements into precise real-time movements of robotic instruments inside the patient's body, were also summarized. From the analysis carried out in this work, all known approaches to SLAM have their own limitations, but the EKF emerges as the most used.

Acknowledgments

Funding: None.

Footnote

Provenance and Peer Review: This article was commissioned by the Guest Editors (Luca Bertolaccini and Dania Nachira) for the series “Science behind Thoracic Surgery” published in Shanghai Chest. The article has undergone external peer review.

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at http://dx.doi.org/10.21037/shc.2018.01.01). The series “Science behind Thoracic Surgery” was commissioned by the editorial office without any funding or sponsorship. The authors have no other conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Bailey T, Durrant-Whyte H. Simultaneous Localisation and Mapping (SLAM): Part II State of the Art.

- Smith R, Self M, Cheeseman P. A Stochastic Map for Uncertain Spatial Relationships. In: Bolles R, Roth B. editors. The Fourth International Symposium of Robotics Research, Cambridge: The MIT Press, 1988:467-74.

- Thrun S, Burgard W, Fox D. Probabilistic Robotics (Intelligent Robotics and Autonomous Agents). The MIT Press, 2005.

- Leonard J, Newman P. Consistent, convergent, and constant-time SLAM. In: Proceedings of the International Joint Conference on Artificial Intelligence (IJCAI). Aug 09-15, 2003; Acapulco, Mexico. San Francisco, CA: Morgan Kaufmann Publishers Inc., 2003:1143-50.

- Castellanos JA, Tardós JD. Mobile Robot Localization and Map Building: A Multisensor Fusion Approach. Springer US, 1999.

- Chong KS, Kleeman L. Feature-Based Mapping in Real, Large Scale Environments Using an Ultrasonic Array. Int J Robot Res 1999;18:3-19.

- Davison AJ, Murray DW. Mobile robot localisation using active vision. In: Burkhardt H, Neumann B. editors. Computer Vision—ECCV’98. ECCV 1998. Lecture Notes in Computer Science. Berlin, Heidelberg: Springer, 1998:809-25.

- Durrant-Whyte HF, Dissanayake MW, Gibbens PW. Toward Deployment of Large Scale Simultaneous Localisation and Map Building (SLAM) Systems. In: Proceedings of the Ninth International Symposium of Robotics Research. Oct 9-12; Snowbird, UT. 1999:121-7.

- Kwon YD, Lee JS. A Stochastic Map Building Method for Mobile Robot using 2-D Laser Range Finder. Autonomous Robots 1999;7:187-200. [Crossref]

- Skrzypczyński P. Simultaneous localization and mapping: A feature-based probabilistic approach. Int J Ap Mat Com-Pol 2009;19:575-88.

- Dissanayake MW, Newman P, Clark S, et al. A solution to the simultaneous localization and map building (SLAM) problem. IEEE Transactions on Robotics and Automation 2001;17:229-41. [Crossref]

- Kotov KY, Maltsev AS, Sobolev MA. Recurrent neural network and extended Kalman filter in SLAM problem. In: Proceedings of the 3rd IFAC Conference on Intelligent Control and Automation Science ICONS. Sep 2-4, 2013; Chengdu, China. 2013:23-6.

- Julier SJ, Uhlmann JK. New extension of the Kalman filter to nonlinear systems. In: Proceedings of SPIE - The International Society for Optical Engineering. 1997;3068:182-93.

- Wan EA, van der Merwe R. The Unscented Kalman Filter. In: Haykin S. editor. Kalman Filtering and Neural Networks. New York, USA: John Wiley & Sons, Inc., 2001.

- Barisic M, Vasilijevic A, Nad D. Sigma-point Unscented Kalman Filter used for AUV navigation. In: Proceedings of the 20th Mediterranean conference on control and automation. Barcelona, Spain. 2012:1365-72.

- Karimi M, Bozorg M, Khayatian AR. A comparison of DVL/INS fusion by UKF and EKF to localize an autonomous underwater vehicle. In: Proceedings of the First RSI/ISM International Conference on Robotics and Mechatronics (ICRoM). IEEE, 2013:62-7.

- Allotta B, Caiti A, Costanzi R, et al. A new AUV navigation system exploiting unscented Kalman filter. Ocean Engineering 2016;113:121-32. [Crossref]

- Thrun S, Koller D, Ghahramani Z, et al. Simultaneous Mapping and Localization with Sparse Extended Information Filters: Theory and Initial Results. In: Proceedings of the Fifth International Workshop on Algorithmic Foundations of Robotics. Nice, France, 2002.

- Newman PM. Invariant filtering for simultaneous localization and mapping. PhD dissertation, University of Sydney, 2000. Available online: http://www.robots.ox.ac.uk/~mobile/Theses/pmnthesis.pdf

- Deans MC, Hebert M. Invariant Filtering for Simultaneous Localization and Mapping. In: Proceedings of the IEEE International Conference on Robotics and Automation. 2000:1042-7.

- Csorba M. Simultaneous Localization and Map Building. PhD dissertation, University of Oxford, 1997.

- Lu F, Milios E. Globally Consistent Range Scan Alignment for Environment Mapping. Autonomous Robots 1997;4:333-49. [Crossref]

- Cadena C, Neira J. SLAM in O(logn) with the Combined Kalman-Information Filter. Rob Auton Syst 2010;58:1207-19. [Crossref]

- Hahnel D, Burgard W, Fox D, et al. An efficient fastSLAM algorithm for generating maps of large-scale cyclic environments from raw laser range measurements. In: Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems. Oct 27-31, 2003; Las Vegas, NV, United States. 2003:206-11.

- Moreno L, Garrido S, Blanco D, et al. Differential Evolution solution to the SLAM problem. Rob Auton Syst 2009;57:441-50. [Crossref]

- Liu JS, Chen R. Sequential Monte Carlo Methods for Dynamic Systems. J Am Stat Assoc 1998;93:1032-44. [Crossref]

- Doucet A, de Freitas N, Gordon N. editors. Sequential Monte Carlo Methods in Practice. 1st edition. New York: Springer, 2001.

- Doucet A, de Freitas N, Murphy K, et al. Rao-Blackwellised Particle Filtering for Dynamic Bayesian Networks. In: Proceedings of the 16th Conference on Uncertainty in Artificial Intelligence. Jun 30-Jul 03, 2000; San Francisco, CA, USA. Morgan Kaufmann Publishers Inc., 2000:176-83.

- Montemerlo M, Thrun S, Whittaker W. Conditional particle filters for simultaneous mobile robot localization and people-tracking. In: Proceedings of the IEEE International Conference on Robotics and Automation (ICRA). May 11-15; Washington, DC, USA. 2002:695-701.

- Murphy KP. Bayesian map learning in dynamic environments. In: Proceedings of the 12th International Conference on Neural Information Processing Systems. Nov 29-Dec 04, 1999; Denver, CO, USA. Cambridge, MA, USA: MIT Press, 1999:1015-21.

- Bailey T, Nieto J, Nebot E. Consistency of the FastSLAM algorithm. In: Proceedings of the IEEE International Conference on Robotics and Automation (ICRA). May 15-19, 2006; Orlando, FL, USA. IEEE, 2006:424-9.

- Beevers KR, Huang WH. Fixed-lag Sampling Strategies for Particle Filtering SLAM. In: Proceedings of the IEEE International Conference on Robotics and Automation. Apr 10-14, 2007; Roma, Italy. IEEE, 2007:2433-8.

- Doucet A, Briers M, Sénécal S. Efficient Block Sampling Strategies for Sequential Monte Carlo Methods. J Comput Graph Stat 2006;15:693-711. [Crossref]

- Gilks WR, Berzuini C. Following a moving target—Monte Carlo inference for dynamic Bayesian models. J R Stat Soc Series B Stat Methodol 2001;63:127-46. [Crossref]

- Montemerlo M, Thrun S, Koller D, et al. FastSLAM: A Factored Solution to the Simultaneous Localization and Mapping Problem. In: Proceedings of the AAAI National Conference on Artificial Intelligence. Edmonton, Canada. 2002:593-8.

- Montemerlo M, Thrun S. Simultaneous localization and mapping with unknown data association using FastSLAM. In: Proceedings of the IEEE International Conference on Robotics and Automation. Sep 14-19, 2003; Taipei, Taiwan. IEEE, 2003:2:1985-91.

- Crisan D, Doucet A. A survey of convergence results on particle filtering methods for practitioners. IEEE Transactions on Signal Processing 2002;50:736-46. [Crossref]

- Dempster AP, Laird NM, Rubin DB. Maximum Likelihood from Incomplete Data via the EM Algorithm. J R Stat Soc Series B Stat Methodol 1977;39:1-38.

- Thrun S, Martin C, Liu Y, et al. A real-time expectation-maximization algorithm for acquiring multiplanar maps of indoor environments with mobile robots. IEEE Transactions on Robotics and Automation 2004;20:433-43. [Crossref]

- Le Corff S, Fort G, Moulines E. Online Expectation Maximization algorithm to solve the SLAM problem. Statistical Signal Processing Workshop (SSP). Jun 28-30, 2011; Nice, France. IEEE, 2011:225-8.

- Hahnel D, Schulz D, Burgard W. Map building with mobile robots in populated environments. In: Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems. Sep 30-Oct 04, 2002; Lausanne, Switzerland. IEEE, 2002:496-501.

- Hahnel D, Triebel R, Burgard W. Map building with mobile robots in dynamic environments. In: Proceedings of the IEEE International Conference on Robotics and Automation. Sep 14-19, 2003; Taipei, Taiwan. IEEE, 2003:1557-63.

- Bibby C, Reid I. Simultaneous Localisation and Mapping in Dynamic Environments (SLAMIDE) with Reversible Data Association. In: Proceedings of Robotics: Science and Systems Conference. Atlanta, 2007.

- Rogers JG, Trevor AJ, Nieto-Granda C, et al. SLAM with Expectation Maximization for moveable object tracking. In: Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Oct 18-22, 2010; Taipei, Taiwan. IEEE, 2010.

- Konolige K, Bowman J, Chen JD, et al. View-based maps. Int J Rob Res 2010;29:941-57. [Crossref]

- Bosse M, Newman P, Leonard J, et al. An Atlas framework for scalable mapping. In: Proceedings of the IEEE International Conference on Robotics and Automation. Sep 14-19, 2003; Taipei, Taiwan. IEEE, 2003:1899-906.

- Estrada C, Neira J, Tardos JD. Hierarchical SLAM: real-time accurate mapping of large environments. IEEE Transactions on Robotics 2005;21:588-96. [Crossref]

- Nüchter A, Lingemann K, Hertzberg J, et al. 6D SLAM with approximate data association. In: Proceedings of the 12th International Conference on Advanced Robotics. Jul 18-20, 2005; Seattle, WA, USA. IEEE, 2005:242-9.

- Gutmann JS, Konolige K. Incremental mapping of large cyclic environments. In: Proceedings of the IEEE International Symposium on Computational Intelligence in Robotics and Automation (CIRA). Nov 08-09, 1999; Monterey, CA, USA. IEEE, 2002:318-25.

- Folkesson J, Christensen H. Graphical SLAM - a self-correcting map. In: Proceedings of the IEEE International Conference on Robotics and Automation. Apr 26-May 01, 2004; New Orleans, LA, USA. IEEE, 2004:383-90.

- Folkesson JB, Christensen HI. Robust SLAM. 5th Symposium on Intelligent Autonomous Vehicles. Jul 05-07, 2004; Lisboa, Portugal. Elsevier, 2004;37:722-7.

- Olson E, Leonard J, Teller S. Fast iterative alignment of pose graphs with poor initial estimates. IEEE International Conference on Robotics and Automation. May 15-19, 2006; Orlando, FL, USA. IEEE, 2006:2262-9.

- Konolige K. Large-scale map-making. In: Proceedings of the 19th National Conference on Artificial Intelligence. Jul 25-29, 2004; San Jose, California. AAAI Press, 2004:457-63.

- Montemerlo M, Thrun S. Large-Scale Robotic 3-D Mapping of Urban Structures. In: Ang MH, Khatib O. editors. Experimental Robotics IX. Springer Tracts in Advanced Robotics. Berlin, Heidelberg: Springer, 2006:141-50.

- Thrun S, Montemerlo M. The Graph SLAM Algorithm with Applications to Large-Scale Mapping of Urban Structures. Int J Rob Res 2006;25:403-29. [Crossref]

- Se S, Lowe DG, Little JJ. Vision-based global localization and mapping for mobile robots. IEEE Transactions on Robotics 2005;21:364-75. [Crossref]

- Bonin-Font F, Ortiz A, Oliver G. Visual Navigation for Mobile Robots: A Survey. J Intell Robot Syst 2008;53:263-96. [Crossref]

- Burschka D, Hager GD. V-GPS(SLAM): vision-based inertial system for mobile robots. In: Proceedings of the IEEE International Conference on Robotics and Automation. Apr 26-May 01, 2004; New Orleans, LA, USA. IEEE, 2004.

- Lothe P, Bourgeois S, Dekeyser F, et al. Monocular SLAM Reconstructions and 3D City Models: Towards a Deep Consistency. In: Ranchordas A, Pereira JM, Araújo HJ, et al. editors. Computer Vision, Imaging and Computer Graphics. Theory and Applications. VISIGRAPP 2009. Communications in Computer and Information Science. Berlin, Heidelberg: Springer, 2010:201-14.

- Cumani A, Denasi S, Guiducci A, et al. Integrating Monocular Vision and Odometry for SLAM. WSEAS Transactions on Computers 2004;3:625-30.

- Bleser G, Becker M, Stricker D. Real-time vision-based tracking and reconstruction. J Real Time Image Process 2007;2:161-75. [Crossref]

- Montiel JM, Civera J, Davison AJ. Unified Inverse Depth Parametrization for Monocular SLAM. In: Proceedings of Robotics: Science and Systems. Philadelphia, USA, 2006.

- Davison AJ, Reid ID, Molton ND, et al. MonoSLAM: Real-Time Single Camera SLAM. IEEE Transactions on Pattern Analysis and Machine Intelligence 2007;29:1052-67. [Crossref] [PubMed]

- Pinies P, Lupton T, Sukkarieh S, et al. Inertial Aiding of Inverse Depth SLAM using a Monocular Camera. IEEE International Conference on Robotics and Automation. Apr 10-14, 2007; Roma, Italy. IEEE, 2007:2797-802.

- Engel J, Schöps T, Cremers D. LSD-SLAM: Large-Scale Direct Monocular SLAM. In: Fleet D, Pajdla T, Schiele B, et al. editors. Computer Vision – ECCV 2014. ECCV 2014. Lecture Notes in Computer Science, vol 8690. Cham: Springer, 2014.

- Caruso D, Engel J, Cremers D. Large-scale direct SLAM for omnidirectional cameras. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Sep 28-Oct 02, 2015; Hamburg, Germany. IEEE, 2015.

- Gutierrez D, Rituerto A, Montiel JM, et al. Adapting a real-time monocular visual SLAM from conventional to omnidirectional cameras. 2011 IEEE International Conference on Computer Vision Workshops (ICCV Workshops). Nov 06-13, 2011; Barcelona, Spain. IEEE, 2011:343-50.

- Castellanos JA, Montiel JM, Neira J, et al. The SPmap: A Probabilistic Framework for Simultaneous Localization and Map Building. IEEE Transactions on Robotics and Automation 1999;15:948-53. [Crossref]

- Davison AJ. Real-Time Simultaneous Localisation and Mapping with a Single Camera. In: Proceedings of the Ninth IEEE International Conference on Computer Vision. Washington, DC, USA: IEEE Computer Society, 2003:1403-16.

- Nemra A, Aouf N. Robust Airborne 3D Visual Simultaneous Localization and Mapping with Observability and Consistency Analysis. J Intell Robot Syst 2009;55:345-76. [Crossref]

- Lacroix S, Mallet A, Jung IK, et al. Vision-based SLAM. SLAM Summer School 2006. Oxford, 2006. Available online: http://www.robots.ox.ac.uk/~SSS06/Website/index.html

- Schleicher D, Bergasa LM, Ocaña M, et al. Real-time hierarchical stereo Visual SLAM in large-scale environments. Rob Auton Syst 2010;58:991-1002. [Crossref]

- Cumani A, Denasi S, Guiducci A, et al. Robot Localisation and Mapping with Stereo Vision. WSEAS Transactions on Circuits and Systems 2004;3:2116-21.

- Atiya S, Hager GD. Real-time vision-based robot localization. IEEE Transactions on Robotics and Automation 1993;9:785-800. [Crossref]

- Christensen HI, Kirkeby NO, Kristensen S, et al. Model-driven vision for in-door navigation. Rob Auton Syst 1994;12:199-207. [Crossref]

- Hashima M, Hasegawa F, Kanda S, et al. Localization and obstacle detection for robots for carrying food trays. In: Proceedings of the 1997 IEEE/RSJ International Conference on Intelligent Robots and Systems. Sep 11, 1997; Grenoble, France. IEEE, 1997:345-51.

- Kak AC, Andress KM, Lopez-Abadia C, et al. Hierarchical Evidence Accumulation in the Pseiki System and Experiments in Model-Driven Mobile Robot Navigation. In: Proceedings of the Fifth Annual Conference on Uncertainty in Artificial Intelligence. Amsterdam, The Netherlands: North-Holland Publishing Co., 1990;10:353-69.

- Kriegman DJ, Triendl E, Binford TO. Stereo vision and navigation in buildings for mobile robots. IEEE Transactions on Robotics and Automation 1989;5:792-803. [Crossref]

- Matthies L, Shafer S. Error modeling in stereo navigation. IEEE Journal on Robotics and Automation 1987;3:239-48. [Crossref]

- Sutherland KT, Thompson WB. Localizing in unstructured environments: dealing with the errors. IEEE Transactions on Robotics and Automation 1994;10:740-54. [Crossref]

- Matthies L, Balch T, Wilcox B. Fast optical hazard detection for planetary rovers using multiple spot laser triangulation. In: Proceedings of the 1997 IEEE International Conference on Robotics and Automation. Apr 25, 1997; Albuquerque, NM, USA. IEEE, 1997:859-66.

- Simmons R, Krotkov E, Chrisman L, et al. Experience with rover navigation for lunar-like terrains. In: Proceedings of the 1995 IEEE/RSJ International Conference on Intelligent Robots and Systems. Aug 05-09, 1995; Pittsburgh, PA, USA. IEEE, 1995:441-6.

- Sim R, Elinas P, Griffin M, et al. Design and analysis of a framework for real-time vision-based SLAM using Rao-Blackwellised particle filters. The 3rd Canadian Conference on Computer and Robot Vision. Jun 07-09, 2006; Quebec, Canada. 2006.

- Sim R, Little JJ. Autonomous vision-based exploration and mapping using hybrid maps and Rao-Blackwellised particle filters. 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems. Oct 09-15, 2006; Beijing, China. IEEE, 2006.

- Gil A, Reinoso Ó, Ballesta M, et al. Multi-robot visual SLAM using a Rao-Blackwellized particle filter. Rob Auton Syst 2010;58:68-80. [Crossref]

- Pradeep V, Medioni G, Weiland J. Visual loop closing using multi-resolution SIFT grids in metric-topological SLAM. IEEE Conference on Computer Vision and Pattern Recognition. Jun 20-25, 2009; Miami, FL, USA. IEEE, 2009:1438-45.

- Schleicher D, Bergasa LM, Barea R, et al. Real-Time Simultaneous Localization and Mapping using a Wide-Angle Stereo Camera and Adaptive Patches. 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems. Oct 09-15, 2006; Beijing, China. IEEE, 2006:55-60.

- Lemaire T, Berger C, Jung IK, et al. Vision-Based SLAM: Stereo and Monocular Approaches. Int J Comput Vis 2007;74:343-64. [Crossref]

- Mahmoud D, Salem MA, Ramadan H, et al. 3D Graph-Based Vision-SLAM Registration and Optimization. International Journal of Circuits Systems and Signal Processing 2014;8:123-30.

- Engelhard N, Endres F, Hess J, et al. Real-time 3D visual SLAM with a hand-held RGB-D camera. In: Proceedings of the RGB-D workshop on 3D perception in robotics at the European robotics forum. Vasteras, Sweden, 2011.

- Triggs B, McLauchlan PF, Hartley RI, et al. Bundle Adjustment — A Modern Synthesis. In: Triggs B, Zisserman A, Szeliski R. editors. Vision Algorithms: Theory and Practice. IWVA 1999. Lecture Notes in Computer Science, vol 1883. Berlin, Heidelberg: Springer, 1999:298-372.

- Mouragnon E, Lhuillier M, Dhome M, et al. Real Time Localization and 3D Reconstruction. 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. Jun 17-22, 2006; New York, NY, USA. IEEE, 2006:363-70.

- Klein G, Murray D. Parallel Tracking and Mapping for Small AR Workspaces. 6th IEEE and ACM International Symposium on Mixed and Augmented Reality. Nov 13-16, 2007; Nara, Japan. IEEE, 2007:225-34.

- Mur-Artal R, Montiel JM, Tardós JD. ORB-SLAM: A Versatile and Accurate Monocular SLAM System. IEEE Transactions on Robotics 2015;31:1147-63. [Crossref]

- Cheein FA, Lopez N, Soria CM, et al. SLAM algorithm applied to robotics assistance for navigation in unknown environments. J Neuroeng Rehabil 2010;7:10. [Crossref] [PubMed]

- Malcolme-Lawes L, Kanagaratnam P. Robotic navigation and ablation. Minerva Cardioangiol 2010;58:691-9. [PubMed]

- de Ruiter QM, Moll FL, van Herwaarden JA. Current state in tracking and robotic navigation systems for application in endovascular aortic aneurysm repair. J Vasc Surg 2015;61:256-64. [Crossref] [PubMed]

- Antoniou GA, Riga CV, Mayer EK, et al. Clinical applications of robotic technology in vascular and endovascular surgery. J Vasc Surg 2011;53:493-9. [Crossref] [PubMed]

- Peters T, Davey B, Munger P, et al. Three-dimensional multimodal image-guidance for neurosurgery. IEEE Trans Med Imaging 1996;15:121-8. [Crossref] [PubMed]

- Romero J, Lupercio F, Goodman-Meza D, et al. Electroanatomic mapping systems (CARTO/EnSite NavX) vs. conventional mapping for ablation procedures in a training program. J Interv Card Electrophysiol 2016;45:71-80. [Crossref] [PubMed]

- Ernst S. Magnetic and robotic navigation for catheter ablation: "joystick ablation". J Interv Card Electrophysiol 2008;23:41-4. [Crossref] [PubMed]

- Ernst S. Robotic approach to catheter ablation. Curr Opin Cardiol 2008;23:28-31. [Crossref] [PubMed]

- Earley MJ, Showkathali R, Alzetani M, et al. Radiofrequency ablation of arrhythmias guided by non-fluoroscopic catheter location: a prospective randomized trial. Eur Heart J 2006;27:1223-9. [Crossref] [PubMed]

- Wein W, Khamene A, Clevert DA, et al. Simulation and fully automatic multimodal registration of medical ultrasound. Med Image Comput Comput Assist Interv 2007;10:136-43. [PubMed]

- Cleary K, Zhang H, Glossop N, et al. Electromagnetic tracking for image-guided abdominal procedures: overall system and technical issues. Conf Proc IEEE Eng Med Biol Soc 2005;7:6748-53. [PubMed]

- Zhong H, Kanade T, Schwartzman D. Sensor guided ablation procedure of left atrial endocardium. Med Image Comput Comput Assist Interv 2005;8:1-8. [PubMed]

- Dong J, Calkins H, Solomon SB, et al. Integrated electroanatomic mapping with three-dimensional computed tomographic images for real-time guided ablations. Circulation 2006;113:186-94. Erratum in: Circulation 2006;114:e509. [Crossref] [PubMed]

- Enquobahrie A, Cheng P, Gary K, et al. The image-guided surgery toolkit IGSTK: an open source C++ software toolkit. J Digit Imaging 2007;20:21-33. [Crossref] [PubMed]

- Mountney P, Stoyanov D, Davison A, et al. Simultaneous stereoscope localization and soft-tissue mapping for minimal invasive surgery. Med Image Comput Comput Assist Interv 2006;9:347-54. [PubMed]

- Grasa OG, Civera J, Güemes A, et al. EKF Monocular SLAM 3D Modeling, Measuring and Augmented Reality from Endoscope Image Sequences. In: 5th Workshop on Augmented Environments for Medical Imaging including Augmented Reality in Computer-Aided Surgery, held in conjunction with MICCAI, 2009.

- Noonan DP, Mountney P, Elson DS, et al. A stereoscopic fibroscope for camera motion and 3D depth recovery during Minimally Invasive Surgery. IEEE International Conference on Robotics and Automation. May 12-17, 2009; Kobe, Japan. IEEE, 2009.

- Koolwal AB, Barbagli F, Carlson C, et al. An Ultrasound-based Localization Algorithm for Catheter Ablation Guidance in the Left Atrium. Int J Robot Res 2010;29:643-65. [Crossref]

- García O, Civera J, Güemes A, et al. Real-time 3D Modeling from Endoscope Image Sequences. International Conference on Robotics and Automation Workshop on Advanced Sensing and Sensor Integration in Medical Robotics. May 13, 2009; Kobe, Japan. 2009.

- Koppel D, Chen CI, Wang YF, et al. Toward automated model building from video in computer-assisted diagnoses in colonoscopy. In: Proceedings Volume 6509, Medical Imaging 2007: Visualization and Image-Guided Procedures. San Diego, CA, United States. 2007.

- Wu CH, Sun YN, Chang CC. Three-Dimensional Modeling From Endoscopic Video Using Geometric Constraints Via Feature Positioning. IEEE Transactions on Biomedical Engineering 2007;54:1199-211. [Crossref] [PubMed]

- Burschka D, Li M, Ishii M, et al. Scale-invariant registration of monocular endoscopic images to CT-scans for sinus surgery. Med Image Anal 2005;9:413-26. [Crossref] [PubMed]

- Mountney P, Yang GZ. Motion compensated SLAM for image guided surgery. Med Image Comput Comput Assist Interv 2010;13:496-504. [PubMed]

- Hu M, Penney GP, Rueckert D, et al. Non-rigid reconstruction of the beating heart surface for minimally invasive cardiac surgery. Med Image Comput Comput Assist Interv 2009;12:34-42. [PubMed]

- Stoyanov D, Mylonas GP, Deligianni F, et al. Soft-tissue motion tracking and structure estimation for robotic assisted MIS procedures. Med Image Comput Comput Assist Interv 2005;8:139-46. [PubMed]

Cite this article as: Scaradozzi D, Zingaretti S, Ferrari A. Simultaneous localization and mapping (SLAM) robotics techniques: a possible application in surgery. Shanghai Chest 2018;2:5.